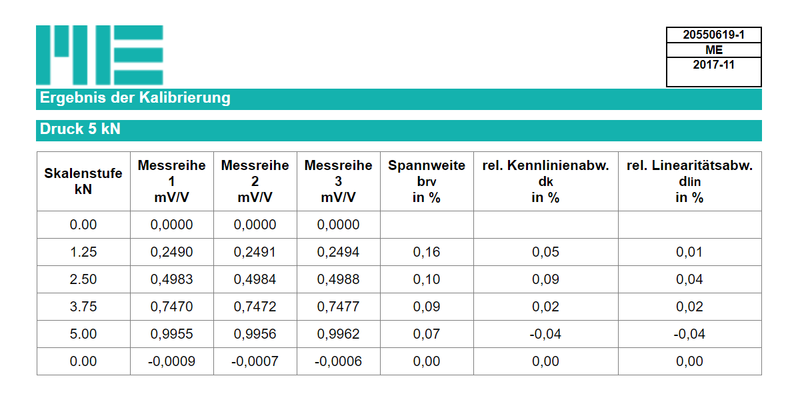

The data sheet for the KM40 load cell specifies accuracy class 0.5. In the present (representative) example, the range at 25% of the nominal load, i.e. at 1.25 kN, is 0.16% of the actual value. Three measured values are too few for a meaningful standard deviation, so the calibration protocol reports the range instead: the absolute difference between the maximum and the minimum of the three measured values, related to the actual value and expressed as a percentage.

On the basis of this range of 0.16% at the 25% load level, the KM40 force sensor could be classified in accuracy class 0.2, which is better than the class 0.5 stated in the data sheet.
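The range criterion and the classification step can be sketched in a few lines of Python. This is a minimal sketch of the simplified comparison described above, not the full set of criteria a calibration standard may define. The three readings are hypothetical values chosen to reproduce the 0.16% range, and both the class list `ACCURACY_CLASSES` and the function names are assumptions for illustration.

```python
from typing import Optional, Sequence

# Assumed class limits in percent; adapt to the classification scheme actually used.
ACCURACY_CLASSES = (0.1, 0.2, 0.5, 1.0)


def relative_range_percent(readings: Sequence[float], actual_value: float) -> float:
    """Max minus min of the repeated readings, relative to the actual value, in %."""
    if not readings or actual_value == 0:
        raise ValueError("need at least one reading and a non-zero actual value")
    return (max(readings) - min(readings)) / abs(actual_value) * 100.0


def tightest_class(criterion_percent: float,
                   classes: Sequence[float] = ACCURACY_CLASSES) -> Optional[float]:
    """Smallest class limit that the criterion does not exceed, or None if none fits."""
    for limit in sorted(classes):
        if criterion_percent <= limit:
            return limit
    return None


if __name__ == "__main__":
    readings_kn = [1.2490, 1.2500, 1.2510]  # hypothetical readings at 25 % of 5 kN
    r = relative_range_percent(readings_kn, actual_value=1.25)
    print(f"range = {r:.2f} %, class {tightest_class(r)}")  # prints: range = 0.16 %, class 0.2
```

The same `tightest_class` helper can be reused for any other criterion expressed as a percentage, such as the linearity deviation discussed next.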

Another criterion for classification is the relative linearity deviation. At 0.04%, it is also well below the 0.2% limit of accuracy class 0.2. The relative linearity deviation is the maximum deviation of the force transducer's characteristic curve, determined with increasing force, from the reference line, expressed relative to the final value of the measuring range used.
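A minimal sketch of the linearity-deviation calculation follows. It assumes that the reference line is the end-point line through the zero and full-scale calibration points (a best-fit line is another common choice) and that the deviation is related to the full-scale signal. The force steps and bridge signals in mV/V are hypothetical values picked to reproduce the 0.04% quoted above; the function name is illustrative.

```python
from typing import Sequence


def linearity_deviation_percent(forces: Sequence[float], signals: Sequence[float]) -> float:
    """Max deviation of the increasing-force curve from the end-point line, in % of full scale.

    Assumes the forces are sorted in increasing order and that the reference line
    runs through the first (zero) and last (full-scale) calibration points.
    """
    if len(forces) != len(signals) or len(forces) < 2:
        raise ValueError("need matching force/signal lists with at least two points")
    f0, s0 = forces[0], signals[0]
    f_end, s_end = forces[-1], signals[-1]
    slope = (s_end - s0) / (f_end - f0)   # slope of the end-point reference line
    full_scale = s_end - s0               # signal span used for normalisation
    worst = max(abs(s - (s0 + slope * (f - f0))) for f, s in zip(forces, signals))
    return worst / full_scale * 100.0


if __name__ == "__main__":
    forces_kn = [0.0, 1.25, 2.5, 3.75, 5.0]          # hypothetical load steps
    signals_mv_v = [0.0, 0.5004, 1.0008, 1.5004, 2.0]  # hypothetical increasing-force readings
    dev = linearity_deviation_percent(forces_kn, signals_mv_v)
    print(f"linearity deviation = {dev:.2f} %")
```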

Determining the hysteresis would require a calibration with both increasing and decreasing load. A special case of hysteresis is the zero-point return error (at 0% load). It is shown in the present calibration record, which compares the zero readings at the beginning and end of the measurement series, and rounds to 0.00%. Because the spring element is made of high-strength spring steel, which itself shows very little hysteresis, measured hysteresis is usually caused by systematic errors in the setup, e.g. linear guides, insufficiently ground contact surfaces for the force sensor, or spring energy stored in the accessories used for force introduction.
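The hysteresis and its special case, the zero-point return error, can be sketched as the difference between decreasing- and increasing-load readings at the same load step. In this sketch, relating the difference to the full-scale signal is an assumption (some procedures relate it to the actual value instead), and the zero readings in mV/V are hypothetical. The example shows how a small zero shift rounds to 0.00% in a two-decimal record.

```python
from typing import List, Sequence


def hysteresis_percent(increasing: Sequence[float], decreasing: Sequence[float],
                       full_scale: float) -> List[float]:
    """(decreasing - increasing) reading at each load step, in % of the full-scale signal."""
    if len(increasing) != len(decreasing):
        raise ValueError("increasing and decreasing series must cover the same load steps")
    return [(d - i) / full_scale * 100.0 for i, d in zip(increasing, decreasing)]


def zero_return_error_percent(zero_start: float, zero_end: float, full_scale: float) -> float:
    """Hysteresis at 0 % load: zero reading after unloading minus zero before loading."""
    return hysteresis_percent([zero_start], [zero_end], full_scale)[0]


if __name__ == "__main__":
    # Hypothetical zero readings in mV/V at start and end of a series, 2 mV/V full scale.
    err = zero_return_error_percent(0.00000, 0.00004, full_scale=2.0)
    print(f"zero-point return error = {err:.2f} %")  # 0.002 % rounds to 0.00 %
```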

The temperature-related drift of the slope depends on the properties of the spring steel (its modulus of elasticity decreases with increasing temperature) and on the properties of the strain gauge (its k-factor increases or decreases with increasing temperature). These are known, systematic influences; they are compensated to well below 0.2%/10°C and therefore only need to be measured as part of a type approval, or can even be derived from the technical data of the strain gauge.

For the force sensor to be classified in accuracy class 0.5, the temperature-related drift of the characteristic value (the slope) should be less than 0.5%/10°C.
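The slope drift can be expressed in %/10°C from sensitivities measured at two temperatures and then compared with the class limit. This is a minimal sketch that assumes a linear temperature dependence between the two points and uses the sensitivity at the lower temperature as the reference value. The sensitivities and temperatures are hypothetical, and the function names are illustrative.

```python
def slope_drift_percent_per_10c(sens_t1: float, sens_t2: float,
                                t1_c: float, t2_c: float) -> float:
    """Relative sensitivity change between two temperatures, scaled to %/10 °C.

    The sensitivity at t1_c is used as the reference value (an assumption).
    """
    if t1_c == t2_c:
        raise ValueError("temperatures must differ")
    relative_change = (sens_t2 - sens_t1) / sens_t1
    return relative_change / (t2_c - t1_c) * 10.0 * 100.0


def meets_class(drift_percent_per_10c: float, class_limit: float) -> bool:
    """True if the magnitude of the drift stays below the class limit (in %/10 °C)."""
    return abs(drift_percent_per_10c) < class_limit


if __name__ == "__main__":
    # Hypothetical sensitivities in mV/V measured at 23 °C and 63 °C.
    tc = slope_drift_percent_per_10c(2.0000, 1.9980, 23.0, 63.0)
    print(f"slope drift = {tc:.3f} %/10 °C, class 0.5: {meets_class(tc, 0.5)}")
```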

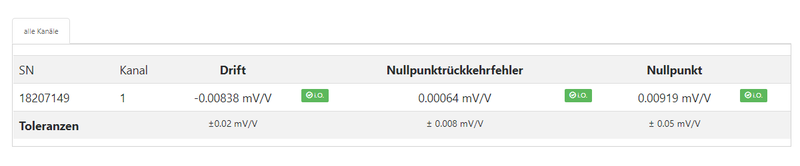

The temperature-related drift of the zero signal must be measured and compensated for each sensor individually.
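The text does not say how the zero drift is compensated. The sketch below only illustrates the arithmetic: it computes the zero-signal drift in % of the rated output per 10°C from two temperature points and applies a linear software correction. The linear model, the reference temperature of 23°C, the readings in mV/V, and all function names are assumptions for illustration.

```python
def zero_drift_percent_per_10c(zero_t1: float, zero_t2: float, rated_output: float,
                               t1_c: float, t2_c: float) -> float:
    """Change of the zero signal between two temperatures, in % of rated output per 10 °C."""
    if t1_c == t2_c:
        raise ValueError("temperatures must differ")
    return (zero_t2 - zero_t1) / rated_output / (t2_c - t1_c) * 10.0 * 100.0


def compensate_zero(reading: float, temp_c: float, zero_slope_per_c: float,
                    ref_temp_c: float = 23.0) -> float:
    """Subtract an assumed linear zero drift (signal units per °C) relative to ref_temp_c."""
    return reading - zero_slope_per_c * (temp_c - ref_temp_c)


if __name__ == "__main__":
    # Hypothetical zero signals in mV/V at 23 °C and 43 °C, rated output 2 mV/V.
    tc0 = zero_drift_percent_per_10c(0.0000, 0.0010, 2.0, 23.0, 43.0)
    slope = (0.0010 - 0.0000) / (43.0 - 23.0)  # zero drift in mV/V per °C
    print(f"zero drift = {tc0:.3f} %/10 °C")
    print(f"corrected zero at 43 °C = {compensate_zero(0.0010, 43.0, slope):.6f} mV/V")
```

Because the zero drift differs from sensor to sensor, the coefficient in such a correction would have to be determined individually for each unit.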

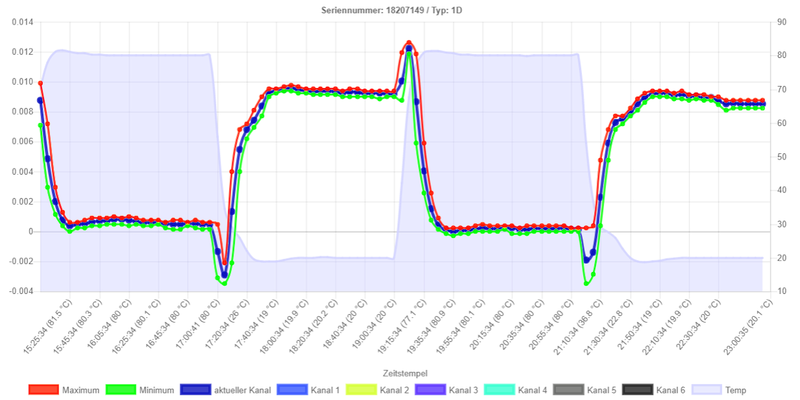

Fig. 2 shows the temperature-related drift of the zero signal for a KM40 5 kN sensor: